Streaming and Batching Data Processing

tags:

- data-engineering

- software-engineering

- cloud

- data-processing

- batching

- streaming

Introduction to Data Processing Paradigms

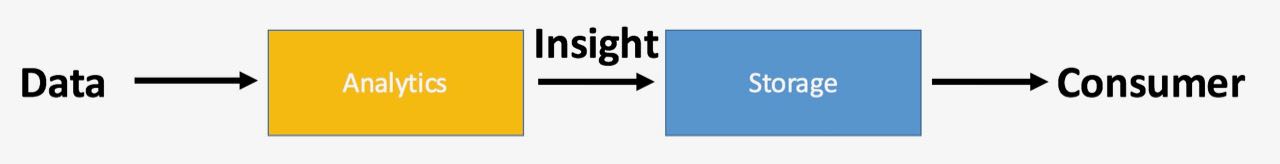

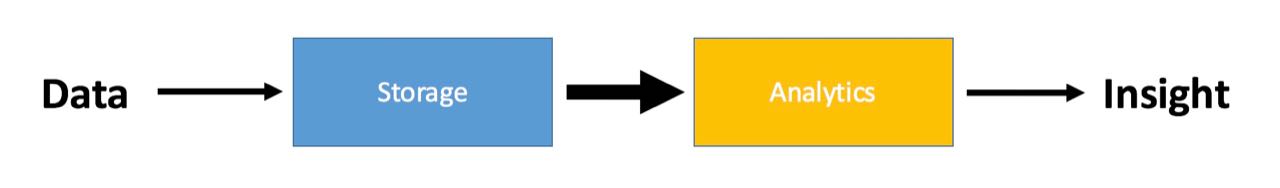

What is a data pipeline? Building a data warehouse pipeline can be complex. If you are new to this field, you will soon realize there is no single right or wrong way to do it; it always depends on your needs.

Yet there are a few basic processes you should put in place when building a data pipeline to improve its operability and performance. This document shares a roadmap that can serve as a guide when building a data warehouse pipeline. The roadmap is intended to help people implement DataOps through a set of processes.

The roadmap section covers five processes that should be implemented to improve your data pipeline's operability and performance: Orchestration, Monitoring, Version Control, CI/CD, and Configuration Management. Along the way it touches on some data warehousing terminology, e.g., data lakes, data warehouses, batching, streaming, ETL, and ELT.

Data Pipeline Roadmap: There are five processes we recommend putting in place to improve your data pipeline's operability and performance: (1) Orchestration, (2) Monitoring, (3) Version Control, (4) CI/CD, and (5) Configuration Management.

This section focuses on:

- Understanding the difference between streaming and batching data processing.

- Overview of common streaming frameworks (e.g., Apache Kafka, Apache Flink) and batch processing frameworks (e.g., Apache Spark, Apache Beam).

- Discussing the advantages and disadvantages of each approach.

Choosing Between Stream and Batch Processing

- Latency Requirement: Determine the urgency of insights required; real-time needs favor streaming while batch processing offers delayed but comprehensive results.

- Data Volume and Frequency: Consider the volume and velocity of incoming data; streaming is ideal for continuous streams, while batch suits larger, accumulated datasets.

- Use Case Scenario: Identify if immediate action or continuous monitoring is essential (streaming) or if historical analysis and reporting are the primary goals (batch).

- Infrastructure and Resources: Assess your system’s capabilities to handle real-time data processing (streaming) or batch processing’s resource requirements.

Streaming Data Processing

- Exploring the characteristics of streaming data and its challenges.

- Introduction to stream processing concepts such as windowing, watermarking, event-time processing, and late-event handling.

- Understanding fault tolerance and exactly-once processing in streaming frameworks.
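One common way to approximate exactly-once results is to pair at-least-once delivery with an idempotent sink. Frameworks such as Flink typically rely on checkpointing and transactional sinks instead; the sketch below swaps in the simpler deduplication technique to show the idea. The `IdempotentSink` class, the `process` helper, and the event fields (`id`, `account`, `amount`) are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class IdempotentSink:
    """Sink that applies each event at most once, keyed by event id."""
    totals: dict = field(default_factory=dict)   # account -> running amount
    seen_ids: set = field(default_factory=set)   # ids that were already applied

    def apply(self, event):
        """Apply the event; return False if it was a duplicate."""
        if event["id"] in self.seen_ids:
            return False
        self.seen_ids.add(event["id"])
        account = event["account"]
        self.totals[account] = self.totals.get(account, 0) + event["amount"]
        return True


def process(events, sink, crash_at=None):
    """Feed events to the sink; raise at index `crash_at` to simulate a failure."""
    for i, event in enumerate(events):
        if crash_at is not None and i == crash_at:
            raise RuntimeError("simulated crash before the offset was committed")
        sink.apply(event)


events = [
    {"id": "e1", "account": "A", "amount": 10},
    {"id": "e2", "account": "A", "amount": 5},
    {"id": "e3", "account": "B", "amount": 7},
]
sink = IdempotentSink()
try:
    process(events, sink, crash_at=2)   # e1 and e2 are applied, then the job crashes
except RuntimeError:
    pass
process(events, sink)                   # the source redelivers the whole batch
print(sink.totals)                      # {'A': 15, 'B': 7}
```

Because the sink remembers which ids it has applied, the redelivered `e1` and `e2` are ignored and the totals match a run with no failure. In a real deployment the set of seen ids would need to be persisted together with the results.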

Building a real-time data pipeline using Apache Kafka and Apache Flink/Beam.

Writing a simple data producer to generate streaming data.

Creating a basic Spark job to process the data. This job uses Python to consume data from the Kafka topic payment_msg.

Python function to generate the streaming data and send it to a Kafka topic:

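```python
import json
import time

from confluent_kafka import Producer  # client library inferred from the dotted config keys


def produce_to_kafka(topic_name, bootstrap_servers='localhost:9092'):
    """Send one fake payment message to `topic_name` every 20 seconds."""
    producer_conf = {
        'bootstrap.servers': bootstrap_servers,
        'client.id': 'python-producer'
    }
    producer = Producer(producer_conf)

    try:
        while True:
            # generate_fake_payment() and delivery_report() are defined elsewhere
            payment_msg = generate_fake_payment()
            payment_serialized_msg = json.dumps(payment_msg).encode('utf-8')
            producer.produce(topic_name, payment_serialized_msg, callback=delivery_report)
            producer.poll(0)  # serve delivery callbacks for earlier messages
            time.sleep(20)
    except KeyboardInterrupt:
        print("KeyboardInterrupt: Stopping Kafka producer")
    finally:
        # Block until all queued messages are delivered. The original close()
        # call is dropped: many confluent_kafka releases provide no Producer.close().
        producer.flush()
```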
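The producer above calls `generate_fake_payment()` and `delivery_report`, which are not shown in this document. A minimal sketch of what they might look like follows. The payment fields (`payment_id`, `user_id`, `amount`, `currency`, `created_at`) are hypothetical, and the callback assumes the `(err, msg)` signature that the confluent_kafka client passes to delivery callbacks.

```python
import json
import random
import uuid
from datetime import datetime, timezone


def generate_fake_payment():
    """Return one synthetic payment event as a JSON-serializable dict (hypothetical schema)."""
    return {
        "payment_id": str(uuid.uuid4()),
        "user_id": random.randint(1, 1000),
        "amount": round(random.uniform(1.0, 500.0), 2),
        "currency": random.choice(["USD", "EUR", "VND"]),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def delivery_report(err, msg):
    """Delivery callback: `err` is None on success, otherwise it describes the failure."""
    if err is not None:
        print(f"Delivery failed: {err}")
    else:
        print(f"Delivered to {msg.topic()} [partition {msg.partition()}] at offset {msg.offset()}")


# Serialize one event the same way the producer does.
payment = generate_fake_payment()
encoded = json.dumps(payment).encode("utf-8")
```

Keeping the generator free of Kafka dependencies makes it easy to test the message shape without a running broker.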

Note: Make sure Spark is installed on your local machine.

Implementing windowed operations on streaming data.

Handling late events and out-of-order data in a streaming pipeline.
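To make windowing and late-event handling concrete, here is a framework-free sketch of a tumbling event-time window with a watermark. It is an illustration under simplifying assumptions, not how Flink or Beam implement it: the function name `tumbling_window_sums`, the `(event_time, value)` tuple layout, and the policy of collecting late events in a side list are all choices made for this example.

```python
from collections import defaultdict


def tumbling_window_sums(events, window_size, allowed_lateness):
    """Sum values per event-time window; return (results, late_events).

    events: iterable of (event_time, value) in arrival order.
    A window [start, start + window_size) is finalized once the watermark
    (max event time seen minus allowed_lateness) reaches the window end.
    """
    open_windows = defaultdict(int)   # window start -> running sum
    results = {}                      # finalized windows
    late_events = []                  # events whose window was already finalized
    watermark = float("-inf")

    for event_time, value in events:
        start = (event_time // window_size) * window_size
        if start + window_size <= watermark:
            late_events.append((event_time, value))   # too late: window is closed
            continue
        open_windows[start] += value
        watermark = max(watermark, event_time - allowed_lateness)
        # Finalize every open window whose end the watermark has reached.
        for s in sorted(w for w in open_windows if w + window_size <= watermark):
            results[s] = open_windows.pop(s)

    # End of input: flush whatever is still open.
    for s in sorted(open_windows):
        results[s] = open_windows.pop(s)
    return results, late_events


arrivals = [(1, 1), (4, 1), (12, 1), (8, 1), (17, 1), (3, 1), (25, 1)]
results, late = tumbling_window_sums(arrivals, window_size=10, allowed_lateness=5)
print(results)  # {0: 3, 10: 2, 20: 1}
print(late)     # [(3, 1)]
```

The event at time 8 arrives out of order but is still counted, because the watermark has not yet reached the end of its window. The event at time 3 arrives after that window was finalized, so it is reported as late instead of silently changing a published result.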

Batch Data Processing

Overview of batch data processing concepts and their applications. Understanding batch processing workflows and data partitioning strategies. Discussing optimization techniques for batch processing jobs.
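As a rough illustration of one partitioning strategy, the sketch below hash-partitions records by key so that each partition can be processed independently. The helper names (`partition_by_key`, `totals_for_partition`) and the record fields are hypothetical; engines such as Spark apply the same idea at much larger scale with their own partitioners.

```python
import zlib
from collections import defaultdict


def partition_by_key(records, key, num_partitions):
    """Assign each record to a partition using a stable hash of its key."""
    partitions = defaultdict(list)
    for record in records:
        # crc32 is stable across runs, unlike Python's built-in hash() for strings
        index = zlib.crc32(str(record[key]).encode("utf-8")) % num_partitions
        partitions[index].append(record)
    return partitions


def totals_for_partition(partition):
    """Aggregate one partition on its own; no other partition is needed."""
    totals = defaultdict(int)
    for record in partition:
        totals[record["user_id"]] += record["amount"]
    return dict(totals)


records = [
    {"user_id": "u1", "amount": 10},
    {"user_id": "u2", "amount": 20},
    {"user_id": "u1", "amount": 5},
    {"user_id": "u3", "amount": 7},
]
partitions = partition_by_key(records, "user_id", num_partitions=2)

# Records with the same key always share a partition, so the per-partition
# results can simply be combined without re-aggregating.
merged = {}
for part in partitions.values():
    merged.update(totals_for_partition(part))
print(merged)
```

Hash partitioning spreads keys evenly when they are well distributed, but a single very frequent key can still overload one partition.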

Developing batch processing jobs using Apache Spark.

Implementing common transformations and actions on batch data.

Optimizing Spark jobs for performance and scalability.

```python
from pyspark.sql import SparkSession

# Both connector JARs must be available locally; spark.jars takes a comma-separated list.
spark = SparkSession.builder \
    .appName("Spark Snowflake Connector") \
    .config("spark.jars",
            "snowflake-jdbc-3.13.16.jar,"
            "spark-snowflake_2.12-2.9.0-spark_3.1.jar") \
    .getOrCreate()

# Connection options; fill in the empty values for your account.
sfOptions = {
    "sfURL": "<hostname>.snowflakecomputing.",
    "sfUser": "",
    "sfPassword": "",
    "sfDatabase": "",
    "sfSchema": "",
    "sfWarehouse": "",
}

jdbc_url = f"jdbc:snowflake://{sfOptions['sfURL']}/"  # not used by the connector read below

# Run the query in Snowflake and load the result as a DataFrame.
df = spark.read \
    .format("snowflake") \
    .options(**sfOptions) \
    .option("query", "SELECT COUNT(1) FROM WAREHOUSE.PUBLIC.WEB_EVENTS") \
    .load()

df.show()
```

Alternatively, a SaaS or low-code tool such as Mage AI can be used for batch processing jobs; it supports batch and streaming pipelines, cron scheduling, and pipeline orchestration.

Integrating Streaming and Batch Processing

Exploring hybrid data processing architectures combining streaming and batch processing. Discussing use cases for integrating streaming and batch processing pipelines. Introduction to lambda architecture and kappa architecture.

Lambda architecture

It consists of three layers: a batch layer, a speed layer, and a serving layer [1, 2]. The batch layer processes historical data using batch processing techniques such as MapReduce or Spark. The speed layer processes streaming data using stream processing techniques such as Storm or Flink. The serving layer provides a unified view of the data by combining the results from the batch and speed layers [1, 2].
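The way the serving layer combines the two views can be sketched in a few lines. This is a toy model under stated assumptions: events are `(timestamp, page, count)` tuples, the batch layer has processed everything up to a hypothetical `batch_cutoff`, and the speed layer covers only newer events. The function names are illustrative.

```python
def aggregate(events):
    """Sum counts per page for a list of (timestamp, page, count) events."""
    totals = {}
    for _, page, count in events:
        totals[page] = totals.get(page, 0) + count
    return totals


def serving_view(events, batch_cutoff):
    """Merge a batch view (events up to the cutoff) with a speed view (newer events)."""
    batch = aggregate([e for e in events if e[0] <= batch_cutoff])   # batch layer
    speed = aggregate([e for e in events if e[0] > batch_cutoff])    # speed layer
    merged = dict(batch)
    for page, count in speed.items():
        merged[page] = merged.get(page, 0) + count
    return merged


events = [(1, "home", 3), (2, "cart", 1), (5, "home", 2), (7, "checkout", 1)]
view = serving_view(events, batch_cutoff=4)
print(view)  # {'home': 5, 'cart': 1, 'checkout': 1}
```

The merge is only correct if the two layers split the events at exactly the same cutoff. Keeping that boundary consistent is the synchronization cost usually attributed to this architecture.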

Kappa architecture

It simplifies the lambda architecture by removing the batch layer and using only the stream processing layer [1, 3]. In kappa architecture, all data is treated as a stream, regardless of whether it is historical or real time. The stream processing layer can handle both batch and stream processing tasks by replaying the data from the beginning or from a specific point in time [1, 3].
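Replay is the mechanism that lets a single streaming code path cover historical data. The sketch below models it with an in-memory append-only log; `EventLog` and both counting functions are hypothetical stand-ins for a replayable stream (such as a Kafka topic) and a stream processing job.

```python
class EventLog:
    """Append-only log with integer offsets, standing in for a replayable stream."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)
        return len(self._events) - 1   # offset of the appended event

    def read_from(self, offset=0):
        """Yield events starting at `offset`, oldest first."""
        yield from self._events[offset:]


def count_pages(events):
    """Original job: count events per page."""
    counts = {}
    for event in events:
        counts[event["page"]] = counts.get(event["page"], 0) + 1
    return counts


def count_pages_ignoring_bots(events):
    """Revised job: same counting, with bot traffic filtered out."""
    return count_pages(e for e in events if not e["bot"])


log = EventLog()
for page, bot in [("home", False), ("home", True), ("cart", False), ("home", False)]:
    log.append({"page": page, "bot": bot})

live = count_pages(log.read_from(0))                        # original job
reprocessed = count_pages_ignoring_bots(log.read_from(0))   # new logic, replayed from the start
recent = count_pages(log.read_from(2))                      # replay from a specific offset
print(live, reprocessed, recent)
```

When the logic changes, the new version is run over the same log from offset 0 rather than through a separate batch job. This only works while the log retains the full history that needs reprocessing.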

Comparing Lambda and Kappa architectures

| Architecture | Advantages | Disadvantages |
|---|---|---|
| Lambda architecture | High accuracy and completeness; scalable; fault-tolerant; flexible and adaptable | Complexity; cost; requires decoupling; latency; inconsistency; takes time to sync batch and speed data |
| Kappa architecture | Simple; single source of truth; fast; consistent; real-time or near-real-time data; low latency | Requires expertise and experience with streaming in distributed systems; event ordering and tolerance; not suited to lakehouse solutions or any batch processing |

Designing and implementing a lambda architecture for real-time analytics.

Developing connectors to bridge streaming and batch processing systems.

Testing and monitoring the integrated data processing pipeline.

Does real-time mean the same thing as streaming?

In common: Real-time and streaming technologies are both used to transmit data continuously over the internet, but they have different characteristics and use cases.

Differences:

| Aspect | Real-Time | Streaming |
|---|---|---|
| Definition | Immediate processing and transmission of data | Continuous transmission of data for playback |
| Latency | Very low latency, almost immediate | Slightly higher latency due to buffering |
| Interactivity | High interactivity, suitable for two-way communication | Lower interactivity, typically one-way consumption |
| Buffering | Minimal to no buffering | Uses buffering to ensure smooth playback |
| Examples | Video conferencing, online gaming, live broadcasting | Video on demand, music streaming, live streaming services |
| Use Cases | Telemedicine, financial trading systems, live event coverage | Entertainment, media consumption, educational content delivery |

In general, while both technologies are used for continuous data transmission, real-time focuses on immediate processing and interaction with minimal delay, making it suitable for interactive applications. Streaming focuses on delivering a continuous flow of data for consumption, with some buffering to ensure smooth playback, making it ideal for media and content delivery.

Advanced Topics and Project Work

Deep dive into advanced topics such as stateful stream processing, complex event processing, and stream-to-stream joins. Overview of deployment strategies for data processing applications.
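A stream-to-stream join is a good example of why state matters: each side must remember recent events from the other side. The sketch below joins hypothetical click and payment events for the same user when their timestamps are at most `window` apart. It assumes events arrive in timestamp order on a single merged input, which real systems cannot rely on; the function name and tuple layout are illustrative.

```python
from collections import defaultdict, deque


def interval_join(events, window):
    """Join 'click' and 'payment' events per user within `window` time units.

    events: iterable of (timestamp, stream_name, user_id, payload), in timestamp order.
    Returns a list of (user_id, click_payload, payment_payload) tuples.
    """
    # Per-stream, per-user buffers of (timestamp, payload): this is the join state.
    state = {"click": defaultdict(deque), "payment": defaultdict(deque)}
    other = {"click": "payment", "payment": "click"}
    joined = []

    for ts, stream, user_id, payload in events:
        candidates = state[other[stream]][user_id]
        # Evict buffered events that are too old to match this or any later event.
        while candidates and candidates[0][0] < ts - window:
            candidates.popleft()
        for _, other_payload in candidates:
            pair = (payload, other_payload) if stream == "click" else (other_payload, payload)
            joined.append((user_id, *pair))
        state[stream][user_id].append((ts, payload))
    return joined


events = [
    (1, "click", "u1", "view_cart"),
    (3, "payment", "u1", 25),
    (4, "click", "u2", "view_item"),
    (12, "payment", "u2", 40),
    (13, "click", "u1", "checkout"),
]
joined = interval_join(events, window=5)
print(joined)  # [('u1', 'view_cart', 25)]
```

Only the first click and payment for `u1` fall within five time units of each other. Note that state is evicted only for keys that are probed again, so a production implementation would also need a timer-based cleanup for idle keys.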

Working on a capstone project to apply the concepts learned throughout the bootcamp.

Collaborating in teams to design and implement a data processing solution for a real-world scenario.

Presenting the project to peers and receiving feedback for improvement.

Summary

- What is streaming and batching?

- What is the upside of streaming vs batching?

- Use-cases of batching and streaming

- Batching and micro-batch processing

- Can you sync the batch and streaming layer and if yes how?

- Data reconciliation after data has been migrated from on-prem to cloud storage

- Handling data that changes during the migration

- Dimension data loading process

- Difference between batch and stream processing
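For the data reconciliation question above, one simple approach is to compare per-row fingerprints between the source and the migrated copy. The sketch below is a minimal illustration, assuming both sides fit in memory and share a unique key column; `row_fingerprint`, `reconcile`, and the sample rows are hypothetical.

```python
import hashlib
import json


def row_fingerprint(row):
    """Stable hash of a row, independent of the order of its keys."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key):
    """Compare two row sets by key and report what differs."""
    src = {row[key]: row_fingerprint(row) for row in source_rows}
    tgt = {row[key]: row_fingerprint(row) for row in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


on_prem = [
    {"id": 1, "name": "a", "amount": 10},
    {"id": 2, "name": "b", "amount": 20},
    {"id": 3, "name": "c", "amount": 30},
]
cloud = [
    {"amount": 10, "id": 1, "name": "a"},    # same row, different key order
    {"id": 2, "name": "b", "amount": 99},    # value changed during migration
    {"id": 4, "name": "d", "amount": 40},    # row that does not exist in the source
]
report = reconcile(on_prem, cloud, key="id")
print(report)
# {'missing_in_target': [3], 'unexpected_in_target': [4], 'mismatched': [2]}
```

For large tables the same comparison is usually done on row counts and aggregated checksums per partition first, drilling down to rows only where those differ.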

Setting up a local development environment with Apache Kafka and Apache Spark.

Use a Python virtual environment or install directly on the machine; check the requirements and run pip install -r requirements.txt.

Alternatively, use Docker to create and deploy everything.

Writing a simple data producer to generate streaming data: a Python function that generates the streaming data and sends it to a Kafka topic.

- Payment producer: produces data to a Kafka topic (Python Payment Producer)

- User click producer: produces data to a Kafka topic (Python User Click Producer)